Note

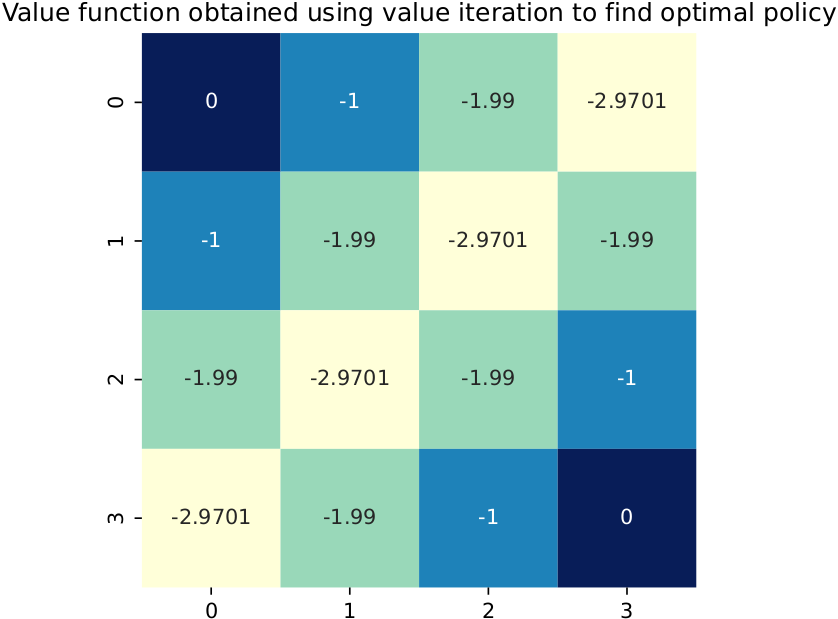

The example uses the code and visualization tools you will work with in the course. You will implement the Q-learning algorithm in Exercise 11: Linear methods and n-step estimation.

About this course

Fundamentally, the course is about understanding and implementing methods for control and learning, such as Q-learning:

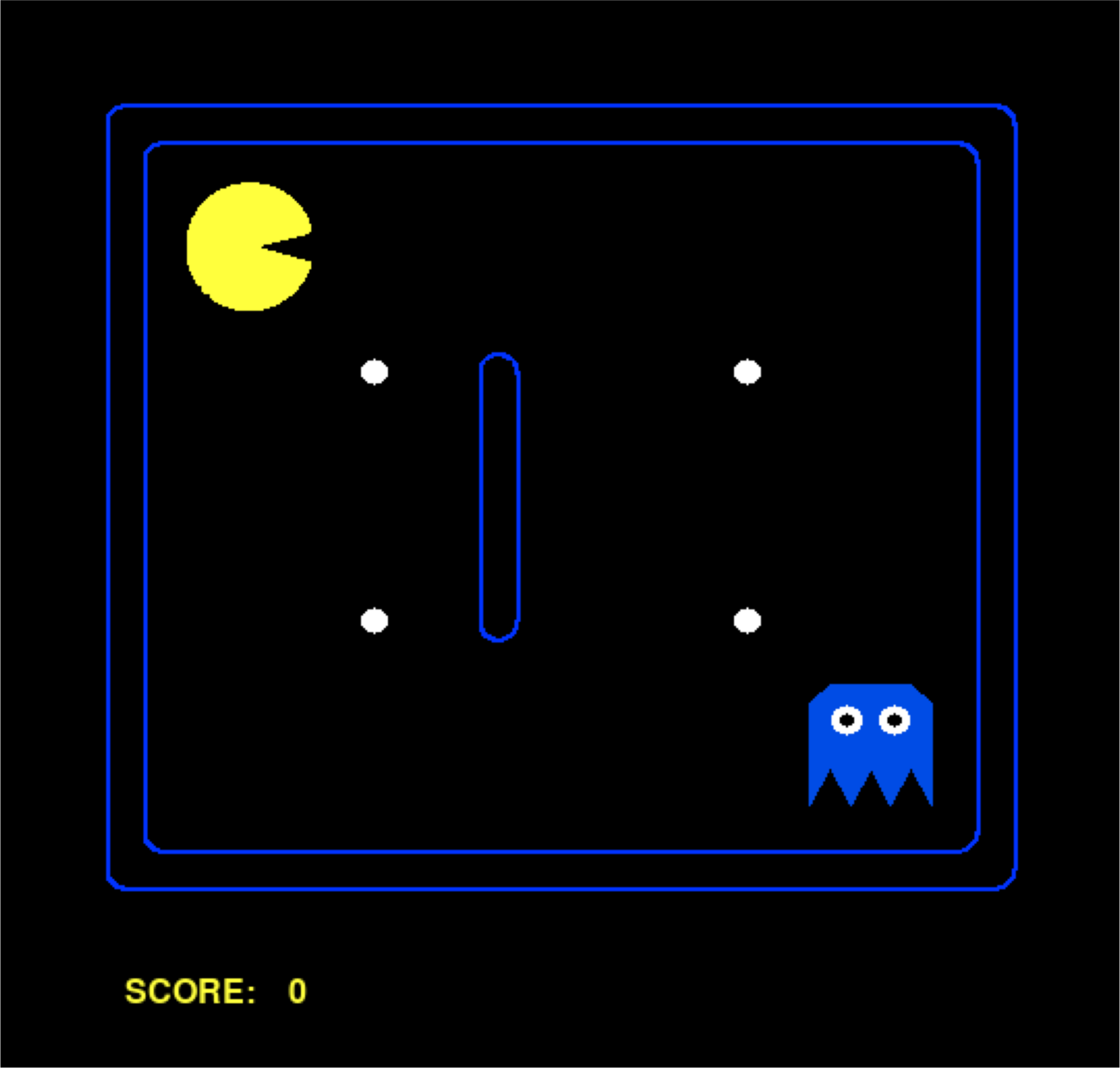

In the example, Pacman must learn to navigate from the bottom-left square to the upper-right square (associated with a reward of +1) while avoiding the square below it (associated with a reward of -1). Q-learning accomplishes this by keeping track of how good each of the four possible actions is in each square (shown here by the numbers and colors) and then using an update rule to modify these numbers.
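To make the update rule concrete, here is a minimal sketch of tabular Q-learning in plain Python. It assumes a hypothetical 4×3 grid loosely modeled on the example: no walls, deterministic moves, start in the bottom-left square, +1 in the upper-right square and -1 in the square below it, with both reward squares ending the episode. After each move it applies the standard update Q(s, a) ← Q(s, a) + α(r + γ max Q(s', ·) − Q(s, a)). The grid, the names `step`, `q_learning` and `greedy_path`, and the parameter values are illustrative assumptions, not the course's environment or the interface used in Exercise 11.

```python
import random

# Hypothetical 4x3 grid (not the course's environment): x = column 0..3,
# y = row 0..2 with y = 0 at the bottom. No walls, deterministic moves.
WIDTH, HEIGHT = 4, 3
START = (0, 0)                          # bottom-left square
REWARDS = {(3, 2): 1.0, (3, 1): -1.0}   # upper-right goal and the square below it
ACTIONS = {"up": (0, 1), "down": (0, -1), "left": (-1, 0), "right": (1, 0)}


def step(state, action):
    """Move one square; bumping into the border leaves the agent in place."""
    dx, dy = ACTIONS[action]
    x, y = state[0] + dx, state[1] + dy
    if not (0 <= x < WIDTH and 0 <= y < HEIGHT):
        x, y = state
    next_state = (x, y)
    reward = REWARDS.get(next_state, 0.0)
    done = next_state in REWARDS        # entering either reward square ends the episode
    return next_state, reward, done


def q_learning(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Q[s][a] estimates how good action a is in square s (the numbers in the figure).
    Q = {(x, y): {a: 0.0 for a in ACTIONS} for x in range(WIDTH) for y in range(HEIGHT)}
    for _ in range(episodes):
        s, done = START, False
        while not done:
            # Epsilon-greedy: mostly take the best-looking action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.choice(list(ACTIONS))
            else:
                a = max(Q[s], key=Q[s].get)
            s_next, r, done = step(s, a)
            # Update rule: move Q[s][a] toward r + gamma * max_a' Q[s_next][a'].
            target = r if done else r + gamma * max(Q[s_next].values())
            Q[s][a] += alpha * (target - Q[s][a])
            s = s_next
    return Q


def greedy_path(Q, max_steps=20):
    """Follow the greedy policy from START and return the visited squares."""
    s, path = START, [START]
    for _ in range(max_steps):
        s, reward, done = step(s, max(Q[s], key=Q[s].get))
        path.append(s)
        if done:
            break
    return path


if __name__ == "__main__":
    Q = q_learning()
    print(greedy_path(Q))
```

Because the table starts at zero, the agent only learns something once it stumbles into a reward square; the ε-greedy exploration is what guarantees that this eventually happens.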

What you learn

See also

Reinforcement learning techniques that build on what you learn in this course were used to train ChatGPT to follow instructions.

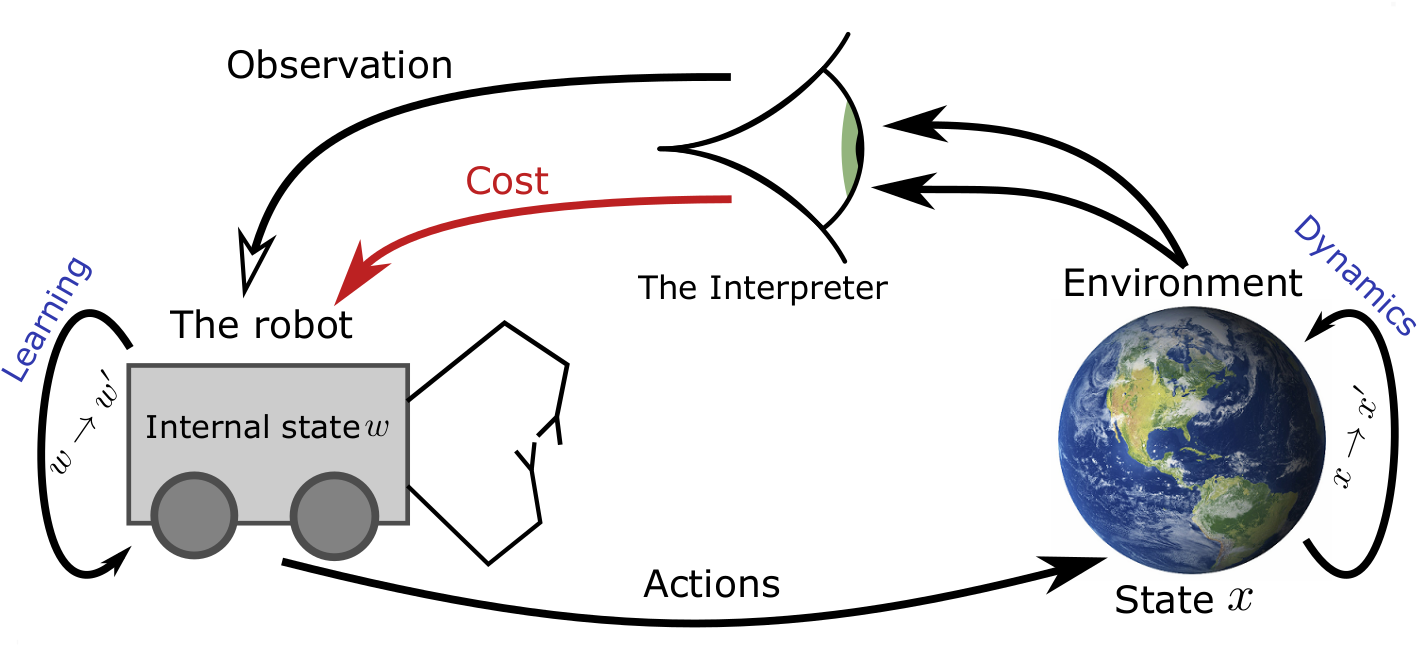

The course provides an introduction to both reinforcement learning and control theory. These subjects attempt to solve the same fundamental problem, namely how an agent that interacts with an environment should plan its decisions so as to maximize future reward.

This problem occurs in many areas of modern AI and engineering. The two subjects differ mainly in what they assume: reinforcement learning emphasizes how to learn about the problem and assumes very little is known initially, whereas control theory assumes much is known initially and that this information should be exploited in the planning.

We approach control and reinforcement learning from three perspectives. The first (Dynamical programming) gives you the fundamental theory that the other two build upon, and which also forms the basis of much traditional AI research and of algorithms such as search. In the other two, control and reinforcement learning, we apply this knowledge to algorithms of immense utility in modern engineering and AI.

The dynamical programming section teaches you the underlying, exact planning algorithm at the basis of both control theory and reinforcement learning. Besides these uses, dynamical programming can be used to derive modern search algorithms and algorithms for games with several decision-makers.
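As a rough illustration of what "exact planning" means, the sketch below applies a finite-horizon backward recursion, one standard form of dynamical programming, J_k(x) = min_u E_w[g(x, u, w) + J_{k+1}(f(x, u, w))], to a hypothetical toy inventory problem. Because the dynamics `f`, the cost `g` and the demand distribution are assumed to be fully known, the recursion computes the optimal expected cost and policy exactly rather than learning them. The problem, its numbers, and the names `f`, `g`, `actions` and `dp` are assumptions for illustration, not the course's notation or code.

```python
# Hypothetical toy problem (not from the course material): stock level
# x in {0, 1, 2}, order u with x + u <= 2, random demand w, horizon N = 3.
N = 3
STATES = [0, 1, 2]
DEMAND = {0: 0.1, 1: 0.7, 2: 0.2}   # P(w) for each possible demand w


def actions(x):
    """Orders that keep the stock at or below capacity 2."""
    return [u for u in range(3) if x + u <= 2]


def f(x, u, w):
    """Dynamics: next stock level; unmet demand is lost."""
    return max(0, x + u - w)


def g(x, u, w):
    """Stage cost: ordering cost plus a quadratic mismatch penalty."""
    return u + (x + u - w) ** 2


def dp(N):
    """Backward recursion J_k(x) = min_u E_w[g(x, u, w) + J_{k+1}(f(x, u, w))]."""
    J = {x: 0.0 for x in STATES}     # terminal cost J_N(x) = 0
    policy = [None] * N              # policy[k][x] = optimal order at stage k
    for k in reversed(range(N)):
        J_next, J = J, {}
        policy[k] = {}
        for x in STATES:
            # Expected cost of each admissible order under the cost-to-go J_next.
            cost = {u: sum(p * (g(x, u, w) + J_next[f(x, u, w)]) for w, p in DEMAND.items())
                    for u in actions(x)}
            u_best = min(cost, key=cost.get)
            policy[k][x] = u_best
            J[x] = cost[u_best]
    return J, policy


J0, policy = dp(N)
print(J0)          # optimal expected cost from each starting stock level
print(policy[0])   # optimal first-stage orders
```

The recursion enumerates every state, action and disturbance at every stage, which is exactly why it is exact and also why it does not scale to large problems. The control and reinforcement learning sections build on this limitation.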

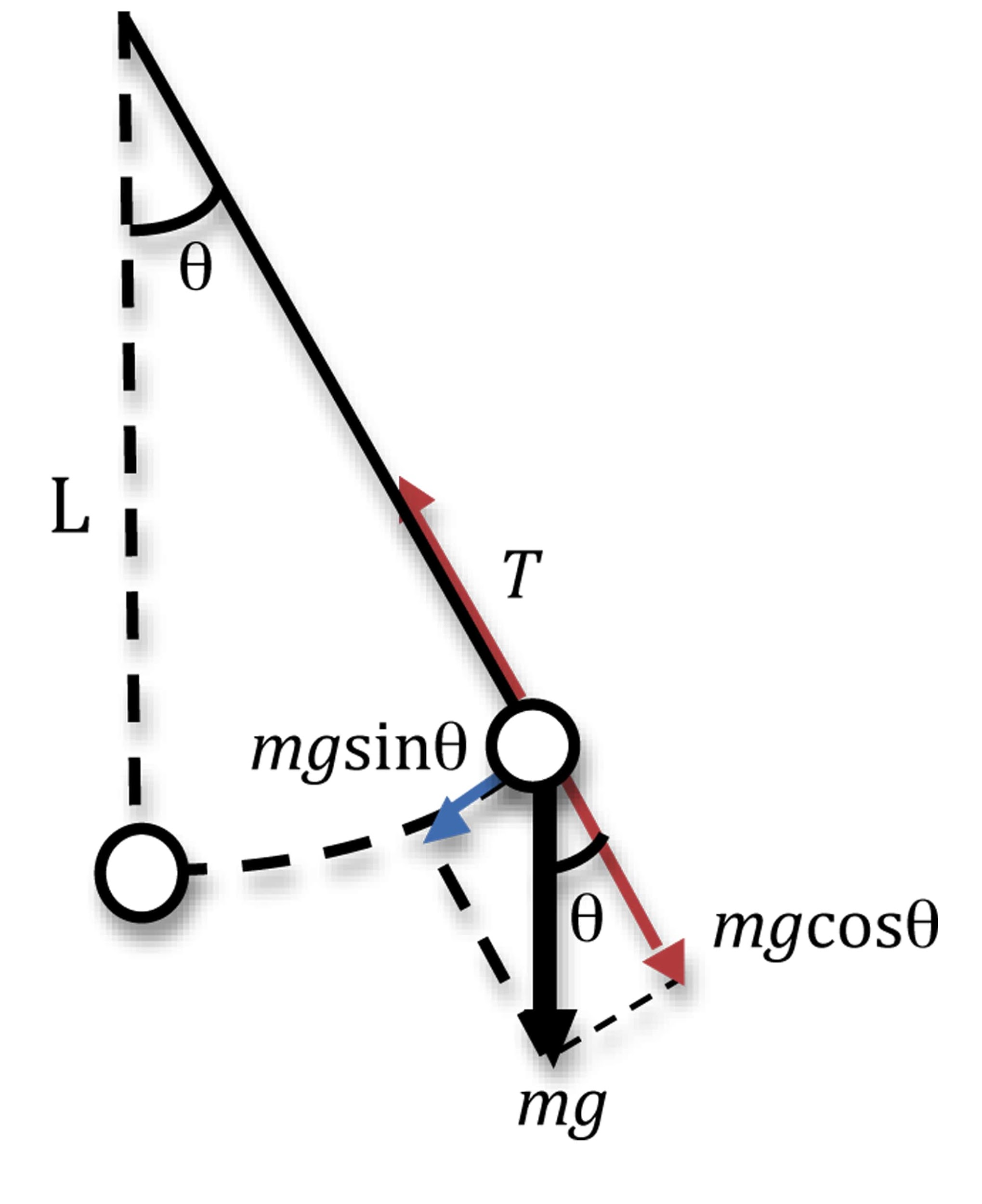

The control section introduces environments where time is continuous. You will deal with simulation, discretization, and popular methods for exact and approximate control, such as LQR, PID control, and collocation-based methods.

The reinforcement learning section applies the dynamical programming algorithm to a setting where the environment is not known and must be learned. In this section, you will learn about the most popular reinforcement learning methods, such as Q-learning, Sarsa, eligibility-trace-based methods, and deep reinforcement learning.

Intended Audience

All students who are interested in modern AI! If you are curious about taking the course, I recommend that you check out the practical information and look at the course material.

I estimate that about 3/4 of the students who attend this course are 4th-semester KID students. However, most bachelor-level students at DTU will meet the prerequisites of the course. For more information, please see Pre-requisites.